Why You Need A Sitemap

A sitemap helps search engines crawl and index your site more efficiently, which makes creating one an important step in improving your website's SEO.

If you have tried free online sitemap generators, you may have had some success with a small website, but most of them ask you to trade your email address for the sitemap.

Our SEO expert, Joe, has built an excellent sitemap generator for you in Python. If you don't know how to run Python code, don't worry; I will make it very easy for you.

You will not need to install anything on your computer to use this free XML sitemap generator.

You will also not need to visit any sitemap generator website.

We'll show you how to use a simple Python script (that we will give you for free) on Google Colab (a free Google tool for running Python code) to generate a sitemap for your website.

That puts a powerful sitemap builder in your hands for free.

You can then create an XML sitemap online for free and download it to your computer.

What Does the Python Code Do?

The free sitemap tool we’ll use performs the following steps to generate a sitemap for any website:

It builds the sitemap starting from the URL you enter when prompted.

Fetches All Links:

It starts by fetching all internal links (links that point to the same domain) from the website URL you provide.

Crawls the Website:

The script then crawls the website, following internal links to every page it can reach and recording where each link was found along with the HTML surrounding it.

Generates XML Sitemap:

As it crawls, the script builds an XML sitemap that lists each URL with a lastmod timestamp and a priority value. Note that lastmod records when the page was crawled, not when its content last changed.

Saves and Cleans Up:

After the crawl finishes, the script saves the sitemap and deletes the temporary progress files (to_crawl.txt and crawled.txt) it used to track the crawl.

Sorts Alphabetically:

Before writing the file, the script sorts your sitemap entries alphabetically by URL, making links easy to find in the sitemap.

Provides Snippets:

After crawling, the script prints each URL together with the page it was found on and the HTML surrounding the link, so you can quickly troubleshoot broken links.
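To see what "internal links" means in practice, here is a minimal, network-free sketch of the filtering step. The helper `internal_links` and the example.com URLs are hypothetical; unlike the script, it derives the domain from the page URL and takes a plain list of href values instead of reading them with BeautifulSoup, but it applies the same `urljoin`, fragment-stripping, and same-host check.

```python
from urllib.parse import urljoin, urlparse


def internal_links(page_url, hrefs):
    """Hypothetical helper: resolve hrefs against page_url and keep same-host URLs."""
    domain = urlparse(page_url).netloc
    found = set()
    for href in hrefs:
        full_url = urljoin(page_url, href)  # "/about" -> "https://example.com/about"
        parsed = urlparse(full_url)
        if parsed.netloc != domain:  # other sites and mailto:/tel: links are skipped
            continue
        # Drop the #fragment so page#top and page#form count as one URL
        found.add(parsed._replace(fragment="").geturl())
    return sorted(found)


hrefs = [
    "/about",
    "contact#form",
    "https://example.com/blog/post#top",
    "https://other.com/x",
    "mailto:hi@example.com",
]
print(internal_links("https://example.com/blog/post", hrefs))
# ['https://example.com/about', 'https://example.com/blog/contact', 'https://example.com/blog/post']
```

Because the check compares hosts exactly, www.example.com and example.com count as different sites, so enter your URL in the form your site actually uses.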

Here is the code. Copy the free sitemap builder code, and I'll show you where to paste it.

Free Online Sitemap Generator Code

```python
import os
import xml.dom.minidom as minidom
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from urllib.parse import urljoin, urlparse

import requests
from bs4 import BeautifulSoup

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def get_all_links(url, domain):
    """Fetch a page and return (links, link_sources, link_html_snippets).

    links is None when the page could not be loaded, so the caller can
    keep that URL out of the sitemap.
    """
    try:
        # The timeout stops the crawl from hanging on an unresponsive page
        response = requests.get(url, timeout=10)
        if response.status_code != 200:
            print(f"Failed to retrieve {url} (status code: {response.status_code})")
            return None, {}, {}
        # PDFs, images, etc. load fine but have no HTML links to follow
        if 'text/html' not in response.headers.get('Content-Type', ''):
            return [], {}, {}
        soup = BeautifulSoup(response.text, 'html.parser')
        links = set()
        link_sources = {}        # link -> page it was found on
        link_html_snippets = {}  # link -> HTML surrounding the link
        for link in soup.find_all('a', href=True):
            full_url = urljoin(url, link.get('href'))
            parsed_full_url = urlparse(full_url)
            # Keep internal links only (same host as the starting URL)
            if parsed_full_url.netloc == domain:
                # Drop #fragments so page#a and page#b count as one URL
                clean_url = parsed_full_url._replace(fragment='').geturl()
                links.add(clean_url)
                link_sources[clean_url] = url
                link_html_snippets[clean_url] = link.parent.prettify()
        return list(links), link_sources, link_html_snippets
    except Exception as e:
        print(f"Failed to get links from {url}: {e}")
        return None, {}, {}


def append_to_sitemap(page_url, urlset):
    url_element = ET.SubElement(urlset, 'url')
    ET.SubElement(url_element, 'loc').text = page_url
    # lastmod is the crawl time, taken in UTC to match the +00:00 offset
    lastmod = ET.SubElement(url_element, 'lastmod')
    lastmod.text = datetime.now(timezone.utc).strftime('%Y-%m-%dT%H:%M:%S+00:00')
    ET.SubElement(url_element, 'priority').text = '1.00'


def save_state(to_crawl, crawled, to_crawl_file, crawled_file):
    # Write progress to disk so an interrupted crawl can resume
    with open(to_crawl_file, 'w') as f:
        for url in to_crawl:
            f.write(url + '\n')
    with open(crawled_file, 'w') as f:
        for url in crawled:
            f.write(url + '\n')


def load_state(to_crawl_file, crawled_file):
    to_crawl = set()
    crawled = set()
    if os.path.exists(to_crawl_file):
        with open(to_crawl_file, 'r') as f:
            to_crawl = set(f.read().splitlines())
    if os.path.exists(crawled_file):
        with open(crawled_file, 'r') as f:
            crawled = set(f.read().splitlines())
    return to_crawl, crawled


def crawl(url, domain, urlset, to_crawl_file, crawled_file):
    to_crawl, crawled = load_state(to_crawl_file, crawled_file)
    # When resuming an interrupted run, re-add the pages it already crawled
    for page_url in crawled:
        append_to_sitemap(page_url, urlset)
    if not to_crawl:
        to_crawl = {url}
    link_sources = {}        # where each link was found
    link_html_snippets = {}  # HTML around each link
    while to_crawl:
        current_url = to_crawl.pop()
        if current_url in crawled:
            continue
        print(f"Crawling: {current_url}")
        crawled.add(current_url)
        new_links, sources, snippets = get_all_links(current_url, domain)
        if new_links is not None:
            # Only pages that loaded successfully are listed in the sitemap
            append_to_sitemap(current_url, urlset)
            to_crawl.update(set(new_links) - crawled)
            link_sources.update(sources)
            link_html_snippets.update(snippets)
            print(f"Found {len(new_links)} links on this page, {len(crawled)} pages crawled so far.")
        save_state(to_crawl, crawled, to_crawl_file, crawled_file)
        print(f"To crawl: {len(to_crawl)} URLs remaining.")
    # Print the source page and HTML snippet of each link
    for link, source in link_sources.items():
        print(f"Link: {link} was found on: {source}")
        print(f"HTML snippet:\n{link_html_snippets[link]}\n")
    return crawled


def remove_duplicates(urlset):
    seen = set()
    for url in urlset.findall('url'):
        loc = url.find('loc').text
        if loc in seen:
            urlset.remove(url)
        else:
            seen.add(loc)


def strip_namespace(tag):
    return tag.split('}', 1)[-1]


def clean_namespace(urlset):
    # Remove any "{namespace}" prefix from child tags
    for url in urlset.findall('.//*'):
        for elem in url:
            elem.tag = strip_namespace(elem.tag)


def ensure_scheme(url):
    url = url.strip()
    if not urlparse(url).scheme:
        return 'https://' + url
    return url


def sort_urls(urlset):
    # Build a new <urlset> with entries ordered alphabetically by <loc>
    sorted_root = ET.Element('urlset', xmlns=SITEMAP_NS)
    for url in sorted(urlset.findall('url'), key=lambda u: u.find('loc').text):
        sorted_root.append(url)
    return sorted_root


def pretty_print_xml(elem):
    rough_string = ET.tostring(elem, 'utf-8')
    reparsed = minidom.parseString(rough_string)
    return reparsed.toprettyxml(indent="  ")


def create_sitemap(url):
    url = ensure_scheme(url)
    domain = urlparse(url).netloc
    print(f"Starting crawl of: {url} with domain: {domain}")
    to_crawl_file = "to_crawl.txt"
    crawled_file = "crawled.txt"
    urlset = ET.Element('urlset', xmlns=SITEMAP_NS)
    urls = crawl(url, domain, urlset, to_crawl_file, crawled_file)
    print(f"Total URLs crawled: {len(urls)}")
    remove_duplicates(urlset)
    clean_namespace(urlset)
    sorted_urlset = sort_urls(urlset)
    sitemap_file = f"{domain.replace('.', '-')}-sitemap.xml"
    with open(sitemap_file, 'w', encoding='utf-8') as f:
        f.write(pretty_print_xml(sorted_urlset))
    # Clean up state files after crawling
    for state_file in (to_crawl_file, crawled_file):
        if os.path.exists(state_file):
            os.remove(state_file)
    print(f"Sitemap has been created and saved as {sitemap_file}")


if __name__ == "__main__":
    website_url = input("Enter the website URL to crawl: ")
    create_sitemap(website_url)
```

Running the Python Code on Google Colab

Google Colab is a free cloud-based service that allows you to run Python code without any setup.

Follow these steps to create your sitemap using Google Colab:

- Access Google Colab: Go to Google Colab and sign in with your Google account.

- Create a New Notebook: Click on "New Notebook" to create a new Python notebook.

- Copy and Paste the Script: Copy the provided Python code and paste it into a new cell in your notebook.

- Install Dependencies: Colab usually has the required libraries (requests and BeautifulSoup) preinstalled, but to be safe, add the following line at the top of the notebook and run it before the script:

!pip install requests beautifulsoup4

- Run the Code: Click the Run button (the play icon at the top left of the cell) or press Shift + Enter to execute the script, then scroll down to the bottom of the cell to find the prompt.

- Input Your Website URL: When prompted, enter the URL you want to crawl, for example joeseo.co.ke. If you leave out https://, the script adds it for you.

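- Download the Sitemap: After the script finishes, the sitemap is saved as [your-domain]-sitemap.xml, with the dots in your domain replaced by hyphens (for example, joeseo-co-ke-sitemap.xml). You can download it by opening the Files panel in the left sidebar and downloading the file from there.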
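If clicking through the sidebar is awkward, Colab can also trigger the download from code. The sketch below assumes the script has already run in the same notebook; `sitemap_filename` and `download_sitemap` are hypothetical helpers, and `google.colab.files.download` is only available inside Colab, so outside it the function just prints where the file is.

```python
import os


def sitemap_filename(domain):
    # Same naming rule as create_sitemap(): dots become hyphens
    return f"{domain.replace('.', '-')}-sitemap.xml"


def download_sitemap(domain):
    """Hypothetical helper: download the sitemap in Colab, or report its path elsewhere."""
    path = sitemap_filename(domain)
    try:
        from google.colab import files  # only importable inside Google Colab
    except ImportError:
        print(f"Not running in Colab. Your sitemap is at: {os.path.abspath(path)}")
        return False
    files.download(path)  # asks the browser to save the file
    return True


print(sitemap_filename("joeseo.co.ke"))  # joeseo-co-ke-sitemap.xml
```

In a new cell, run `download_sitemap("joeseo.co.ke")`, passing the domain exactly as the script printed it on its "Starting crawl" line.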

Before it finishes, the script also tells you where each link was found by printing the HTML surrounding each discovered link.

This makes it easy to troubleshoot broken links and remove or correct them on your website.
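The printed snippets tell you where a link lives, but not whether it works. One way to go further is to check each link's HTTP status and group the failures by the page that links to them. This is a sketch under assumptions: `http_status` and `broken_link_report` are hypothetical helpers, the report expects a dictionary shaped like the script's `link_sources` (link → page it was found on), which `crawl()` builds but does not return, and it sends HEAD requests, which some servers reject with 405 even for working pages, so treat those results as "check by hand".

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status code for url, or None if no response was received."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status  # status after any redirects were followed
    except urllib.error.HTTPError as err:  # 4xx and 5xx responses are raised as HTTPError
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts (URLError is an OSError)
        return None


def broken_link_report(link_sources, get_status=http_status):
    """Map each source page to the (link, status) pairs on it that did not return 200."""
    report = {}
    for link, source in link_sources.items():
        status = get_status(link)
        if status != 200:
            report.setdefault(source, []).append((link, status))
    return report


# Usage, given a link_sources dict like the one crawl() builds:
# for page, bad_links in broken_link_report(link_sources).items():
#     print(page, bad_links)
```

Passing a plain dictionary's `.get` as `get_status` lets you try the report offline before pointing it at a live site.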

Detailed Explanation of the Python Sitemap Generator Code

get_all_links(): Fetches all hyperlinks from a given URL and keeps only those on the same domain, recording the page each link was found on and the HTML around it.

append_to_sitemap(): Adds each crawled URL to the XML sitemap with a timestamp and priority.

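crawl(): Repeatedly takes an unvisited URL from the set of pending links, fetches it, adds it to the sitemap, queues the new internal links it finds, and saves the crawl state after each page.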

remove_duplicates(): Ensures no URL is listed more than once in the sitemap.

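save_state() and load_state(): Write the pending and crawled URLs to to_crawl.txt and crawled.txt and read them back, so an interrupted crawl can pick up where it left off.

sort_urls() and pretty_print_xml(): Order the entries alphabetically by URL and format the XML with indentation so it is easy to read.

create_sitemap(): Orchestrates the whole process, from starting the crawl to saving the final sitemap file.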
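`crawl()` is a loop over a set of pending URLs rather than recursion. The sketch below replays that loop on a made-up, in-memory site (the `site` dictionary and `crawl_graph` are hypothetical stand-ins for real pages and `get_all_links()`), which makes one consequence easy to see: a page that no other page links to is never discovered.

```python
def crawl_graph(start, site):
    """Hypothetical offline version of crawl(): visit every page reachable from start.

    site maps each page to the internal links found on it.
    """
    to_crawl = {start}  # pages waiting to be visited (a set, like the script's to_crawl)
    crawled = set()     # pages already visited
    while to_crawl:
        current = to_crawl.pop()
        if current in crawled:
            continue
        crawled.add(current)
        new_links = set(site.get(current, []))  # stands in for get_all_links()
        to_crawl.update(new_links - crawled)
    return crawled


site = {
    "/": ["/about", "/blog"],
    "/blog": ["/blog/post-1", "/"],
    "/blog/post-1": ["/blog"],
    "/about": [],
    "/orphan": ["/"],  # nothing links here, so the crawler never finds it
}
print(sorted(crawl_graph("/", site)))  # ['/', '/about', '/blog', '/blog/post-1']
```

Because `set.pop()` returns an arbitrary element, the visiting order is not predictable; using a `collections.deque` plus a separate seen-set would give breadth-first order, though the final set of pages is the same either way.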

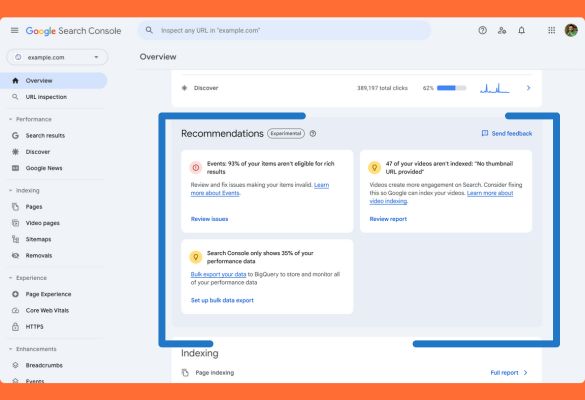

Conclusion

By following these steps, you can easily generate a sitemap for your website using Python on Google Colab.

This approach requires no local setup and is completely free, enabling anyone with an internet connection to create a sitemap online.

Be sure to check your sitemap for accuracy, then submit it to search engines (for example, through Google Search Console) to support your SEO efforts.
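Before submitting, a quick automated check can catch obvious problems. This sketch parses the sitemap XML and flags an empty file, duplicate URLs, URLs on another host, and files over the sitemap protocol's limit of 50,000 URLs per file. `check_sitemap` is a hypothetical helper, and the file name in the usage comment is only an example.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def check_sitemap(xml_text, expected_domain):
    """Return a list of problems found in sitemap XML (an empty list means none found)."""
    root = ET.fromstring(xml_text)
    # Entries inherit the default xmlns, so they are matched through the namespace map
    locs = [(loc.text or "").strip() for loc in root.findall("sm:url/sm:loc", NS)]
    problems = []
    if not locs:
        problems.append("no <url><loc> entries found")
    for url, count in Counter(locs).items():
        if count > 1:
            problems.append(f"duplicate URL ({count}x): {url}")
    for url in locs:
        if urlparse(url).netloc != expected_domain:
            problems.append(f"URL outside {expected_domain}: {url}")
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the {MAX_URLS} per-file limit")
    return problems


# Usage after the script has run:
# with open("joeseo-co-ke-sitemap.xml", encoding="utf-8") as f:
#     print(check_sitemap(f.read(), "joeseo.co.ke"))
```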